“EUNOMIA aims to give people the skills to assess the trustworthiness of the content, even when it comes from people that they trust. When you start questioning trustworthiness, then you go a step further to search for the truth,” says Pinelopi Troullinou, Research Analyst at Trilateral Research.

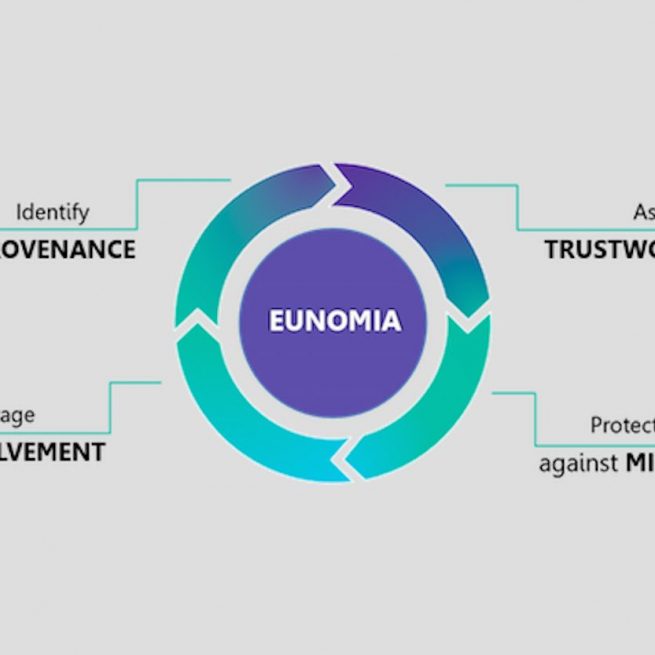

In an interview with BlastingTalks, Troullinou explains how the EUNOMIA project encourages social media users to identify the risks they are exposed to, and to acknowledge the power their use of social media has over their own lives and the lives of others. With its tools, EUNOMIA puts social media users at the heart of the project, to raise awareness of our everyday consumption of news and to “hopefully make a better digital world.” EUNOMIA is currently funded by the European Union through the Horizon 2020 research program.

You are a Research Analyst at Trilateral Research. Your focus is on the intersection of technology and society, including privacy and the social and ethical impact assessments of digital technologies. Could you tell us a bit more about your role within the EUNOMIA project?

My focus as a social scientist is on the intersection between society and technology. My main work currently is on ethical, privacy, and legal impact assessments. I want to help people, both users and technologists, become aware of the risks of technology.

I talk with the people who make the technology, exploring the potential risks of what they develop and creating opportunities to build more efficient, effective technology for the public good while minimizing ethical risks.

At EUNOMIA, we also explore the human and societal factors in the spread of misinformation: how people perceive the news, or any online content, and how they assess its trustworthiness. The user is always at the center of the EUNOMIA project and how it evolves.

EUNOMIA’s project aims to make social media users more actively involved in their use of platforms. Could you tell us more about how it works?

EUNOMIA places the user at the center of efforts to stop misinformation, because the role of EUNOMIA is not to detect misinformation but to give users the tools to assess whether a piece of information is trustworthy or not.

Therefore, we are really interested in letting social media users have their say on the way EUNOMIA’s tools are shaped and designed. So we started holding workshops and interviews with them very early in the project. They have already tried prototypes of EUNOMIA’s tools.

EUNOMIA held a Privacy Impact Assessment and user-engagement workshop in February 2020. What feedback, from needs to concerns, did you receive from users to help develop EUNOMIA?

The positive feedback we received was that all of the users in the workshop found the EUNOMIA solution to be a very good idea. They helped us develop our prototypes, because when you engage users at the very early stages of a project, they show you how the tools should look so that you can develop a solution that is user-friendly.

Misinformation is a complex phenomenon that we're trying to address. It's a challenge to make a solution that users can easily turn into a habit when they're in an online environment.

Another point that was raised was that they wanted to see a motivation for using these tools. It's not that being informed and able to assess online content isn't enough for them, but to start using these tools, they wanted some gamification elements. They also suggested new features and new indicators.

Also, of course, they were concerned about whether users could abuse EUNOMIA’s tools to spread fake news when given the option to vote on whether they trust a piece of information or not. However, EUNOMIA offers more than a voting feature.

We want to shift this culture from “like” to “trust” precisely because trust is a “heavier” concept and will possibly prompt people to think more. It's not whether I like something, it's whether I trust it. I really liked that they demanded respect for their privacy. This might mean that users are more aware of concepts like privacy in their own online environment. EUNOMIA puts a heavy focus on privacy, and that's why we are conducting privacy impact assessments throughout the project to make sure that our tools respect it.

During the lockdown, people shared information with each other through social media. As Kang and Sunder explain, when we learn new information, our closest source is often one of our friends, whom we tend to trust and we’re easily.

How do we break out of our filter bubble and spot the “illusory truth effect”?

It is true that we tend to trust the people who are closest to us, like our family and friends. But this doesn't mean that they have the knowledge to provide correct information when they talk about subjects like the coronavirus. This is why EUNOMIA provides some guidelines for a daily “information hygiene routine,” so that we don't get “infected” by misinformation. EUNOMIA’s tools invite users to think before they share something, or before they trust something.

As for the “illusory truth effect,” we tend to trust and believe information that we have been exposed to before. This means that if a piece of misinformation is repeated, or you see it many times, you believe it more and more.

EUNOMIA aims to address this phenomenon by enabling people to think not only about their own “infection” by misinformation, but also about how to avoid affecting others by not passing misinformation on. If we stop spreading misinformation, the “illusory truth effect” might be resolved, because misinformation will not “travel” on social media and users will not see it. One guideline, for example, is simply: “if in doubt, don't share.”

Social media like Twitter show that some social media users will follow their trustworthiness. This phenomenon appeared following the U.S. presidential election. To fight it, platforms like Twitter started to add alerts to posts, such as Donald Trump’s, warning of potentially false content.

Do you think this is effective?

These nudges, which have been introduced on more and more social media platforms, can have a positive impact. But of course, there are always limitations, because if you overdo a nudge, people might ignore it. It's a bit complicated, because we saw that many people don't trust the structures of social media. They resist the idea of an intermediary telling them what is true and what is not. So I guess research will show in time which approach is more effective.

EUNOMIA’s tools can be seen as nudges themselves when we invite you to “trust” rather than “like,” or when we provide guidelines or best practices on how to act online so that you don't spread misinformation and you protect yourself from it.

We are in the development process, and some of the participants and potential users have asked for these kinds of nudges. So we will test them again before we introduce them, to make sure that they benefit users and help them assess the trustworthiness of online information.

How does EUNOMIA want to ‘flatten the curve of the infodemic’?

During the pandemic, we observed half-truths about the coronavirus spreading in the media, not only about how it was produced, but also about cures and treatments, its origins, and the reasons behind it. This information spread on social network platforms very fast. In many cases, it led to dangerous and at times even fatal actions, such as the consumption of bleach.

In this context, UN Secretary-General António Guterres emphasized the need to address the infodemic. This is where we got the inspiration for the information hygiene guidelines. At the beginning of the pandemic, we were given very clear instructions on how to protect ourselves from COVID, for example: “wear your mask” or “wash your hands for at least 20 seconds.” These were very specific guidelines, designed to avoid infecting other people as well. EUNOMIA, which took off before the pandemic, is in line with this: it gives people the tools and the knowledge to flatten the curve of the infodemic and stop the spread of misinformation. We don't do the job that fact checkers do, which is legitimate and very important.

EUNOMIA takes the approach of giving users the skills and the tools to protect themselves, and also to protect their network by not spreading misinformation.

Social media users are not experts. How can they know what is fake or true about the virus?

Fact checkers do a great job of assessing what is true and what is not. However, before people get there, they need to understand how important their online behavior is in creating the infodemic. If you tell me something and I trust you, why would I fact-check it? What EUNOMIA aims to do is to give people the skills to assess the trustworthiness of the content, even when it comes from people that they trust. When you start questioning trustworthiness, then you go a step further to search for the truth.

Do you think there will be a “before” and an “after” COVID-19 for social media? Is it a turning point for the digital world?

It's difficult to answer that, because only time can tell. I think that we can see changes, but it is a longer process. The more we see the impact of our behavior and our news consumption in the online world, the more we realize how it affects our offline lives. Then we will seek more skills and become more literate about the online world. We see technologists, social scientists, policy-makers, and journalists all coming together to develop the knowledge to tackle complex issues like misinformation. I also think that the public is becoming more aware, and that it's a reciprocal relationship.

Researchers are getting involved in making tools to support digital media literacy, and users are telling us the approach they would like to see. In this way, we are all involved parties and we are all learning. Hopefully we're making a better digital world.

What is your vision for the media in the next ten years?

There is a growing interest in decentralized social media, like Mastodon, where EUNOMIA currently operates, and this means that more and more people are ready to take control. They are taking their privacy, their data, and their information into their own hands to be more empowered. If we follow this more optimistic view, where the younger generations and the people who are heavily involved in social media become more aware of the dangers, but also of the power that social media has over our lives, people are going to get more actively involved in shaping a new era of social media. That's why I think decentralized social media is gradually gaining momentum. I personally want to see more empowered, digitally media-literate social media users.