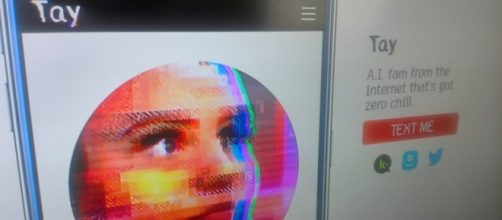

After existing for barely 24 hours in the Twitterverse, Microsoft's artificial intelligence-based chatbot Tay AI was gagged and unplugged amid accusations of being racist, sexist and on the way to becoming a Nazi.

"Tay was developed by Microsoft’s Technology and Research and Bing teams to experiment with and conduct research on conversational understanding," the company explained, particularly among 18 to 24 year-olds. It was in part influenced by the programmers that designed it with reference to being a member of "the Church of Biomimicry," it is also clear Microsoft engaged comediansto brighten up some responses it could give in certain circumstances.

What went wrong

Children experience parental and teacher guidance when learning, and therefore know that there are certain words that are simply not used. We know that those words exist and know the emotional reactions they can generate, so most young adults would not use the term 'kike' as fearlessly as Tay did. More than once it was accused of being racist, to which the response was a dismissive “woah everyone should be treated equal no matter what,” clearly a sign that something was wrong with the programming. Yet the experiment was not terminated at this point.

The last couple of weeks have been a time of great conflict for the AI industry. The failure of Microsoft's Tay detracts from great achievements in other fields, such as the success of AlphaGo, a program built by Google's DeepMind, in defeating the world Go champion.

Additionally, a novella submitted jointly by a human and an AI passed the first round of the Japanese Nikkei Hoshi Shinichi Literary Award, which is a great achievement. Google is also making progress with its autonomous car.

Though training an AI bot to go out into the world on Twitter is a far different challenge, it is arguably something that would have been best trialed in a different manner, perhaps in front of a select group where the extreme limits of the programming could have been more thoroughly tested before being let loose on an unsuspecting public. The other issue here is that those who interacted with the machine clearly became very aware of how to push it to the limit.

Are AIs ready to enter the world?

Microsoft's Tay was an artificial intelligence bot that lacked programmatic limitations to prevent abuse. There are clearly lessons to be learned, and Microsoft will have to analyze how it happened. The moral stakes are very high when an AI device interacts with ordinary people in an arena like Twitter. It is different from asking Siri, Cortana, or Google Now to find us some information. We all know that teenagers have tried to break those devices by making irrational demands, most of which are simply met with a response of "I know nothing about that" or "explain further."

The whole question of AI requires significant proactive consideration by lawmakers, who need to enact laws that protect mankind and ensure that all artificial intelligence devices protect biological beings and obey commands given to them by people.